He has released many lectures covering topics such as building neural networks from scratch, natural language processing starting from a probabilistic model, and more!

This is the first video in the series: “The spelled-out intro to neural networks and backpropagation: building micrograd”

“We are going to start with a blank Jupyter notebook, and by the end of the lecture we will define and train a neural net. And you will get to see everything that goes on under the hood and exactly how that works on an intuitive level.”

Andrej Karpathy

Chapter notes: micrograd overview (00:00:24)

- micrograd – an Autograd engine

- “automatic gradient”

- -> implements backprop

- Backprop

- -> allows calculating gradients for NN nodes

- -> allows iterative tuning to minimize loss
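To make "iterative tuning to minimize loss" concrete, here is a minimal sketch of gradient descent on a single parameter. The loss `toy_loss(w) = (w - 3)^2` and the names `grad_toy_loss`, `gradient_descent`, `lr` and `steps` are hypothetical choices for illustration, and the gradient is worked out by hand here rather than by backprop, which is exactly the part micrograd automates.

```python
def toy_loss(w: float) -> float:
    # Hypothetical one-parameter loss, minimized at w = 3.
    return (w - 3.0) ** 2


def grad_toy_loss(w: float) -> float:
    # d/dw of (w - 3)^2, derived by hand (backprop would compute this for us).
    return 2.0 * (w - 3.0)


def gradient_descent(w: float, lr: float = 0.1, steps: int = 100) -> float:
    for _ in range(steps):
        # Step against the gradient so the loss goes down.
        w -= lr * grad_toy_loss(w)
    return w


w_final = gradient_descent(w=0.0)
print(round(w_final, 4))  # → 3.0
```

Each step shrinks the distance to the minimum by a factor of `1 - 2 * lr`, so after 100 steps `w` is within about 1e-9 of 3.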

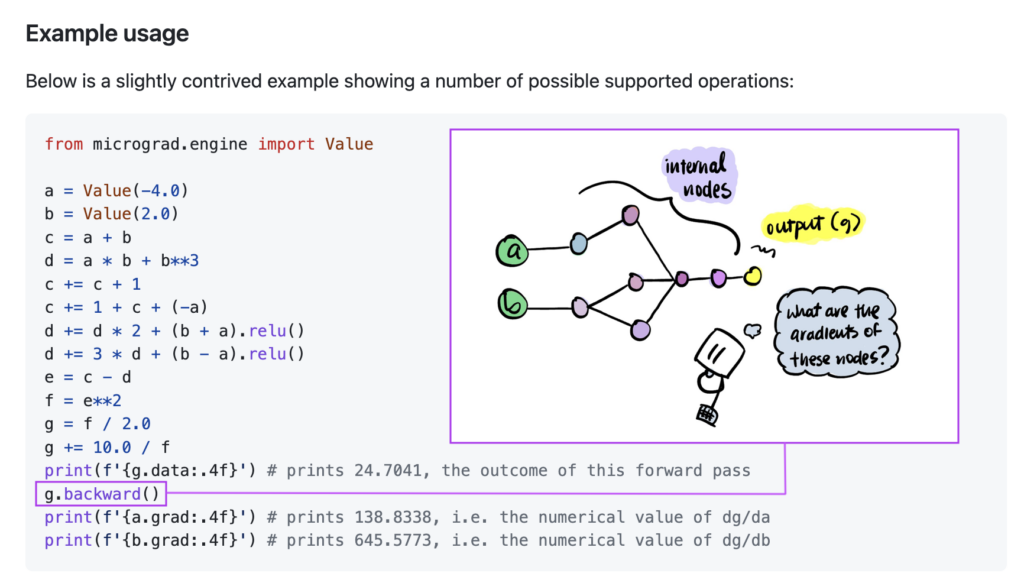

“micrograd” allows you to build mathematical expressions.

Here “a” and “b” are inputs wrapped in Value objects. As we perform math operations, micrograd keeps an internal representation of the whole expression graph, which lets us call .backward() on g. This evaluates the derivative of g with respect to all the internal nodes and the inputs a and b.
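The mechanism can be sketched with a stripped-down `Value` class. This is a hypothetical minimal version loosely modeled on micrograd's `Value`, supporting only `+` and `*`; it is not the actual `engine.py`. Each result remembers its children and a small `_backward` closure that applies the local chain-rule step, and `backward()` runs those closures in reverse topological order.

```python
class Value:
    """A scalar that remembers how it was computed (minimal sketch, + and * only)."""

    def __init__(self, data, _children=(), _op=""):
        self.data = float(data)
        self.grad = 0.0                # d(output)/d(self), filled in by backward()
        self._backward = lambda: None  # pushes out.grad into this node's children
        self._prev = set(_children)
        self._op = _op

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other), "+")

        def _backward():
            # d(a+b)/da = 1 and d(a+b)/db = 1; += because a node may be used twice.
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other), "*")

        def _backward():
            # d(a*b)/da = b and d(a*b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topological sort: every node appears after all of its children.
        topo, visited = [], set()

        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)

        build(self)
        self.grad = 1.0  # d(output)/d(output)
        for v in reversed(topo):
            v._backward()


a = Value(2.0)
b = Value(-3.0)
c = a * b + a   # c = 2 * (-3) + 2 = -4
c.backward()
print(c.data, a.grad, b.grad)  # → -4.0 -2.0 2.0
```

Since `a` feeds into `c` twice, its gradient is the sum of both paths: `dc/da = b + 1 = -2`, while `dc/db = a = 2`.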

Remember: neural networks are just mathematical expressions. They take the input data and the weights of the neural network as inputs, and the output is your predictions or your loss function.
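To see that a network really is "just a mathematical expression", here is a minimal sketch of a single tanh neuron plus a squared-error loss in plain Python. The inputs `xs`, targets `ys`, weights `w` and bias `b` are made-up values for illustration. The loss is an ordinary function of the data and the weights, which is exactly what backprop differentiates.

```python
import math


def neuron(x, w, b):
    # Weighted sum of the inputs plus a bias, squashed by tanh.
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)


def squared_error(predictions, targets):
    # Sum of squared differences between predictions and targets.
    return sum((p - t) ** 2 for p, t in zip(predictions, targets))


xs = [[2.0, 3.0], [1.0, -1.0]]  # hypothetical inputs
ys = [1.0, -1.0]                # hypothetical targets
w, b = [0.5, -0.25], 0.1        # hypothetical weights and bias

preds = [neuron(x, w, b) for x in xs]
total_loss = squared_error(preds, ys)
print(preds, total_loss)
```

Training means nudging `w` and `b` to make `total_loss` smaller, which requires the gradient of the loss with respect to each weight.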

Backpropagation is significantly more general: it doesn’t care about neural networks at all. It deals with arbitrary mathematical expressions, and we just happen to use it for training neural networks.
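As an illustration of that generality, the sketch below backpropagates by hand through an arbitrary expression with no neural network involved, `f(x, y) = sin(x) * y + x**2`, and checks the result against central finite differences. The expression, the intermediate names `a`, `b`, `c`, the evaluation point and the step size `h` are all hypothetical choices.

```python
import math


def f(x, y):
    # An arbitrary expression with no neural network in sight.
    return math.sin(x) * y + x ** 2


def forward_backward(x, y):
    # Forward pass: break f into intermediate nodes.
    a = math.sin(x)
    b = a * y
    c = x ** 2
    out = b + c
    # Backward pass: start from d(out)/d(out) = 1 and apply the chain rule.
    d_out = 1.0
    db = d_out * 1.0         # out = b + c
    dc = d_out * 1.0
    da = db * y              # b = a * y
    dy = db * a
    dx = dc * 2 * x          # c = x ** 2
    dx += da * math.cos(x)   # a = sin(x); x feeds two nodes, so gradients add
    return out, dx, dy


def numerical_grad(func, x, y, h=1e-6):
    # Central differences: (f(v + h) - f(v - h)) / (2h).
    dfdx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    dfdy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return dfdx, dfdy


value, dx, dy = forward_backward(0.7, -1.5)
num_dx, num_dy = numerical_grad(f, 0.7, -1.5)
print(dx, num_dx)
print(dy, num_dy)
```

The two columns should agree to many decimal places; comparing against finite differences like this is a handy sanity check for any hand-written backward pass.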

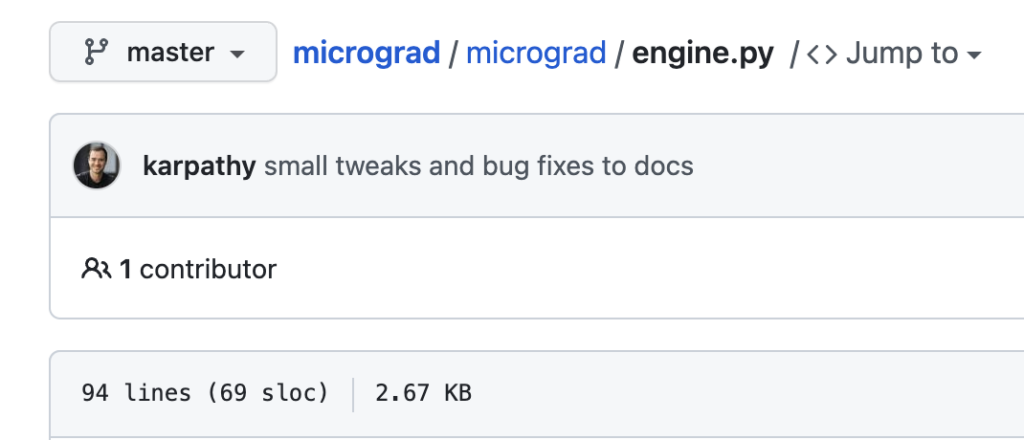

Note: engine.py (94 lines) and nn.py (60 lines) are tiny files, so we can build all of this in one lecture!

Have fun 😃